New FaceCore Face Engine

With major release 2.0.0, a replacement for the OpenSceneGraph-based face engine was released for the Furhat robot and SDK. The new Unity-based face engine, called FaceCore, brings many improvements (listed below) and should be considered the default when building new skills. Migrating existing skills to the new face engine is also simple.

Why should I switch to the FaceCore face engine?

FaceCore is a significant upgrade to the facial animation of the Furhat robot. It is based on industry-standard tools such as Unity and ARKit, and currently includes:

- More parameters (blendshapes) for more detailed control of facial expressions, including asymmetrical gestures.

- Improved lighting (shadows, eye reflections, etc)

- Improved quality of the face (textures) on the robot

- Brand new set of faces known as characters

- Character editing parameters: temporarily modify the size of the lips, the position of the eyebrows, etc.

- Since the same graphics engine and models are used for both the Virtual Furhat and the robot face, discrepancies between the two are minimized

- Capture of facial expressions via ARKit using a recent iPhone

FaceCore replaces the existing OpenSceneGraph (OSG) implementation, but you can switch between FaceCore and the old face engine at any time, so that experiences you created earlier keep working.

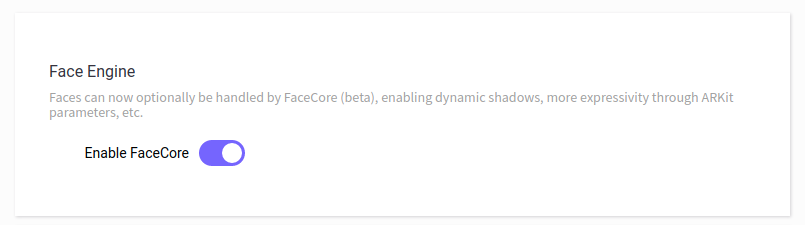

Activating FaceCore

- Start by upgrading your robot to 2.0.0 or later.

- Open the web interface, go to Settings > Face, and turn on the FaceCore toggle. Restart the robot when prompted.

- Calibrate the face using the controls further down on the Face settings page.

Deactivating FaceCore

If you wish to go back to the old face, turn off the FaceCore toggle in Settings > Face and restart the robot, just as when activating the feature. This may be useful, for example, if you have older skills that were used for specific research.

Building skills for use with FaceCore

Most existing skill code is backwards-compatible, but to make the most of FaceCore in the skills you create from now on, we recommend using the revised functions described on this page.

Masks and Characters

Instead of models and textures, you now choose between masks and characters. A character includes textures and can, for most purposes, be used wherever a texture was used before, but it also allows modifications to the underlying 3D geometry and the addition of overlays for even more varied appearances. To keep the naming consistent, we recommend using setMask() and setCharacter() instead of setModel() and setTexture() in your skills. You can select one of the official characters either through an enum (e.g. furhat.setCharacter(Characters.Adult.Isabel)) or by name (e.g. furhat.setCharacter("Isabel")). The shorthand furhat.character = "Isabel" also works.

Additionally, you can use the following helper functions in Kotlin skills to programmatically see the current face and the available options:

- getCharacter(): returns the current character

- getCharacters(): returns the available characters for the current mask

- getMask(): returns the current mask

- getMasks(): returns the available masks

- getFaces(): returns a map with each mask as the key and its available characters as the value
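Since getCharacters() only lists characters for the current mask, a skill that wants a specific character may first need to find which mask offers it. The sketch below is plain Kotlin with no Furhat dependency. It assumes that getFaces() yields a Map<String, List<String>> from mask name to character names, and that switching to a character on another mask means calling setMask() before setCharacter(). The names maskFor, planSwitch, SwitchPlan and sampleFaces (including the "ExampleChild" entry) are hypothetical.

```kotlin
// Hypothetical sample data in the shape assumed for getFaces(): mask -> characters.
val sampleFaces: Map<String, List<String>> = mapOf(
    "adult" to listOf("Isabel"),
    "child" to listOf("ExampleChild")
)

// Returns the mask that offers the given character, or null if no mask has it.
fun maskFor(character: String, faces: Map<String, List<String>>): String? =
    faces.entries.firstOrNull { character in it.value }?.key

// What a skill would need to call: newMask is null when the current mask already fits.
data class SwitchPlan(val newMask: String?, val character: String)

// Plans a character switch, or returns null if the character is not available at all.
fun planSwitch(currentMask: String, character: String, faces: Map<String, List<String>>): SwitchPlan? {
    val mask = maskFor(character, faces) ?: return null
    return SwitchPlan(newMask = if (mask == currentMask) null else mask, character = character)
}
```

In a skill, you would pass in the results of getFaces() and getMask(), then call setMask() when newMask is not null, followed by setCharacter().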

Gestures

To use the new blendshapes, build gestures with ARKitParams, which can be combined with the existing BasicParams. ARKit is Apple's suite of augmented reality tools, which defines a standardized set of blendshapes so that different software solutions can interoperate. You can find Apple's documentation of these blendshapes here. Furhat's implementation of these blendshapes, the ARKitParams, accepts values from 0.0 to 1.0. You can also alter the underlying geometry of the face using CharParams, our own system for creating a variety of appearances.
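Because ARKitParams values are only valid between 0.0 and 1.0, values computed at runtime (for example when scaling the intensity of a smile) can be clamped before they are used in a gesture frame. This is a minimal, SDK-independent sketch: ArkitParam is a hypothetical stand-in enum for a few of the real ARKitParams names, and clampFrame and scaleFrame are illustrative helpers, not part of the Furhat API.

```kotlin
// Hypothetical stand-in for a few ARKitParams names (the real enum is furhatos.gestures.ARKitParams).
enum class ArkitParam { EYE_BLINK_LEFT, JAW_OPEN, MOUTH_SMILE_LEFT, MOUTH_SMILE_RIGHT }

// Valid value range for ARKitParams, as documented above.
val ARKIT_RANGE = 0.0..1.0

// Returns a copy of the frame with every value clamped into the valid range.
fun clampFrame(frame: Map<ArkitParam, Double>): Map<ArkitParam, Double> =
    frame.mapValues { it.value.coerceIn(ARKIT_RANGE) }

// Multiplies every value by factor (e.g. 0.5 for a subtler expression), then clamps.
fun scaleFrame(frame: Map<ArkitParam, Double>, factor: Double): Map<ArkitParam, Double> =
    clampFrame(frame.mapValues { it.value * factor })
```

For example, scaling MOUTH_SMILE_RIGHT at 0.8 by 2.0 yields 1.0 rather than an out-of-range 1.6.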

Both ARKitParams and CharParams are compatible with all mask models that are not marked with "[legacy]". Apart from the neck movements and combined expressions, the BasicParams are now deprecated, and use of ARKitParams is encouraged. You can also use these blendshapes with the Remote API.

Note: The anime [legacy] mask supports most of the BasicParams, but some blendshapes will not work correctly. In the future, we hope to provide more ARKitParam-enabled characters for the child and anime masks.

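```kotlin
import furhatos.gestures.ARKitParams
import furhatos.gestures.BasicParams
import furhatos.gestures.defineGesture

// A gesture mixing ARKitParams blendshapes with BasicParams (including neck movement).
// Play it from a skill with furhat.gesture(AsymmetricSmirk).
val AsymmetricSmirk = defineGesture("AsymmetricSmirk") {
    // Reach these values 0.1 seconds into the gesture
    frame(0.10) {
        BasicParams.EYE_SQUINT_LEFT to 0.8
        BasicParams.EYE_SQUINT_RIGHT to 0.5
        ARKitParams.JAW_LEFT to 1.0           // ARKitParams accept 0.0 to 1.0
        ARKitParams.MOUTH_DIMPLE_LEFT to 1.0
        BasicParams.NECK_PAN to -15           // neck movements remain BasicParams
    }
    // Return all parameters to neutral after 1 second
    reset(1.0)
}
```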

You can check whether the FaceCore face engine is enabled from within your Kotlin skill code using furhat.isFaceCoreActive(). For example, you can use it to have the robot say that FaceCore should be enabled for the best possible experience, or to select a different appearance (such as a similar-looking FaceCore character in place of a legacy OSG texture). You cannot switch the face engine programmatically from within a skill, since switching requires restarting the robot.
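The decision described above can be kept in one small, testable function that takes the engine state as a parameter. This is a plain-Kotlin sketch, not Furhat API: Appearance, chooseAppearance and engineWarning are hypothetical, the warning text is illustrative, and in a skill the Boolean would come from furhat.isFaceCoreActive().

```kotlin
// Hypothetical model of the two kinds of appearance a skill can request.
sealed class Appearance {
    data class FaceCoreCharacter(val name: String) : Appearance()  // would go to setCharacter()
    data class LegacyTexture(val name: String) : Appearance()      // would go to setTexture()
}

// Picks the appearance matching the active face engine.
fun chooseAppearance(faceCoreActive: Boolean, character: String, legacyTexture: String): Appearance =
    if (faceCoreActive) Appearance.FaceCoreCharacter(character)
    else Appearance.LegacyTexture(legacyTexture)

// Text the robot could say when running the legacy engine; null when no warning is needed.
fun engineWarning(faceCoreActive: Boolean): String? =
    if (faceCoreActive) null
    else "For the best experience, please enable FaceCore in Settings, Face, and restart me."
```

Keeping the engine check as a plain parameter means the same logic can be exercised without a robot; in a skill you would call chooseAppearance(furhat.isFaceCoreActive(), "Isabel", ...) with your own legacy texture name.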

Check out this video for a demonstration of how the new blendshapes can be used.

ARKitParams blendshapes

You can see the full list of available ARKitParams below, separated by category:

Brow

- BROW_INNER_UP

- BROW_OUTER_UP_LEFT

- BROW_OUTER_UP_RIGHT

Eyes

- EYE_BLINK_LEFT

- EYE_BLINK_RIGHT

- EYE_LOOK_DOWN_LEFT

- EYE_LOOK_DOWN_RIGHT

- EYE_LOOK_IN_LEFT

- EYE_LOOK_IN_RIGHT

- EYE_LOOK_OUT_LEFT

- EYE_LOOK_OUT_RIGHT

- EYE_LOOK_UP_LEFT

- EYE_LOOK_UP_RIGHT

- EYE_SQUINT_LEFT

- EYE_SQUINT_RIGHT

- EYE_WIDE_LEFT

- EYE_WIDE_RIGHT

Mouth

- MOUTH_CLOSE

- MOUTH_DIMPLE_LEFT

- MOUTH_DIMPLE_RIGHT

- MOUTH_FROWN_LEFT

- MOUTH_FROWN_RIGHT

- MOUTH_FUNNEL

- MOUTH_LEFT

- MOUTH_LOWER_DOWN_LEFT

- MOUTH_LOWER_DOWN_RIGHT

- MOUTH_PRESS_LEFT

- MOUTH_PRESS_RIGHT

- MOUTH_PUCKER

- MOUTH_RIGHT

- MOUTH_ROLL_LOWER

- MOUTH_ROLL_UPPER

- MOUTH_SHRUG_LOWER

- MOUTH_SHRUG_UPPER

- MOUTH_SMILE_LEFT

- MOUTH_SMILE_RIGHT

- MOUTH_STRETCH_LEFT

- MOUTH_STRETCH_RIGHT

- MOUTH_UPPER_UP_LEFT

- MOUTH_UPPER_UP_RIGHT

Cheeks, jaw, and nose

- CHEEK_PUFF

- CHEEK_SQUINT_LEFT

- CHEEK_SQUINT_RIGHT

- JAW_FORWARD

- JAW_LEFT

- JAW_OPEN

- JAW_RIGHT

- NOSE_SNEER_LEFT

- NOSE_SNEER_RIGHT

CharParams facial offsets

These gesture parameters can be used to animate temporary changes to the face, such as bulging eyes. On ARKit-compatible characters, you can use CharParams together with ARKitParams and BasicParams.

Here is the full list of available parameters:

Brow

- EYEBROW_DOWN

- EYEBROW_LARGER

- EYEBROW_NARROWER

- EYEBROW_SMALLER

- EYEBROW_TILT_DOWN

- EYEBROW_TILT_UP

- EYEBROW_UP

- EYEBROW_WIDER

Eyes

- EYES_DOWN

- EYES_NARROWER

- EYES_SCALE_DOWN

- EYES_SCALE_UP

- EYES_TILT_DOWN

- EYES_TILT_UP

- EYES_UP

- EYES_WIDER

Mouth

- MOUTH_DOWN

- MOUTH_FLATTER

- MOUTH_NARROWER

- MOUTH_SCALE

- MOUTH_UP

- MOUTH_WIDER

- LIP_BOTTOM_THICKER

- LIP_BOTTOM_THINNER

- LIP_TOP_THICKER

- LIP_TOP_THINNER

Cheeks, jaw, and nose

- CHEEK_BONES_DOWN

- CHEEK_BONES_NARROWER

- CHEEK_BONES_UP

- CHEEK_BONES_WIDER

- CHEEK_FULLER

- CHEEK_THINNER

- CHIN_DOWN

- CHIN_NARROWER

- CHIN_UP

- CHIN_WIDER

- NOSE_DOWN

- NOSE_NARROWER

- NOSE_UP

- NOSE_WIDER

Although CharParams are meant as temporary offsets from the current character settings, you can make them persistent in a gesture, effectively creating a custom character in addition to the built-in ones.

BasicParams blendshapes

These gesture parameters are supported on both OSG and FaceCore. They are included for legacy compatibility and are also used for lipsync and head movements, among other things. In general, we recommend using ARKitParams whenever possible.

Here is the full list of legacy parameters:

Composite

- EXPR_ANGER

- EXPR_DISGUST

- EXPR_FEAR

- EXPR_SAD

Neck movement

- NECK_TILT

- NECK_PAN

- NECK_ROLL

Lipsync

- PHONE_AAH

- PHONE_B_M_P

- PHONE_BIGAAH

- PHONE_CH_J_SH

- PHONE_D_S_T

- PHONE_EE

- PHONE_EH

- PHONE_F_V

- PHONE_I

- PHONE_K

- PHONE_N

- PHONE_OH

- PHONE_OOH_Q

- PHONE_R

- PHONE_TH

- PHONE_W

General/other

- SMILE_CLOSED

- SMILE_OPEN

- SURPRISE

- BLINK_LEFT

- BLINK_RIGHT

- BROW_DOWN_LEFT

- BROW_DOWN_RIGHT

- BROW_IN_LEFT

- BROW_IN_RIGHT

- BROW_UP_LEFT

- BROW_UP_RIGHT

- EPICANTHIC_FOLD

- EYE_SQUINT_LEFT

- EYE_SQUINT_RIGHT

- LOOK_DOWN

- LOOK_LEFT

- LOOK_RIGHT

- LOOK_UP

- LOOK_DOWN_LEFT

- LOOK_DOWN_RIGHT

- LOOK_LEFT_LEFT

- LOOK_LEFT_RIGHT

- LOOK_RIGHT_LEFT

- LOOK_RIGHT_RIGHT

- LOOK_UP_LEFT

- LOOK_UP_RIGHT

- GAZE_PAN

- GAZE_TILT
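When porting an older gesture, it can help to sort its BasicParams into those that remain in use and those worth replacing with ARKitParams. The following plain-Kotlin sketch works on parameter names as strings. The families it keeps (NECK_, EXPR_ and PHONE_) follow the description above of neck movements, composite expressions and lipsync; the one-to-one ARKit equivalents in ARKIT_EQUIVALENTS are assumptions chosen only where the names clearly correspond, and everything else is flagged for manual review. Advice, adviceFor and the constants are hypothetical names.

```kotlin
// Advice for a single BasicParams name when porting a gesture to FaceCore.
sealed class Advice {
    object KeepBasic : Advice()                       // still in use on FaceCore
    data class UseArkit(val name: String) : Advice()  // suggested ARKitParams replacement
    object ReviewManually : Advice()                  // no assumed one-to-one equivalent
}

// BasicParams families that remain in use: neck, composite expressions, lipsync.
val KEEP_PREFIXES = listOf("NECK_", "EXPR_", "PHONE_")

// Assumed equivalents (hypothetical); verify visually on your character.
val ARKIT_EQUIVALENTS = mapOf(
    "BLINK_LEFT" to "EYE_BLINK_LEFT",
    "BLINK_RIGHT" to "EYE_BLINK_RIGHT",
    "EYE_SQUINT_LEFT" to "EYE_SQUINT_LEFT",
    "EYE_SQUINT_RIGHT" to "EYE_SQUINT_RIGHT"
)

fun adviceFor(basicParam: String): Advice {
    if (KEEP_PREFIXES.any { basicParam.startsWith(it) }) return Advice.KeepBasic
    val arkit = ARKIT_EQUIVALENTS[basicParam] ?: return Advice.ReviewManually
    return Advice.UseArkit(arkit)
}
```

Mapping a whole gesture is then a one-liner, e.g. listOf("NECK_PAN", "BLINK_LEFT").associateWith(::adviceFor).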

Recording gestures using an iPhone

The new gesture blendshapes are built to support ARKit, so to make it easier to create natural gestures, we have created a tool that converts a face recording into a Furhat-compatible gesture saved as JSON. This requires a recent iPhone (iPhone X or later). More information can be found on the Furhat Gesture Capture Tool page.

Fading in/out the face

With furhat.setVisibility(showFace[, durationInMs]), the face can be faded to or from black. This can be useful, for example, to draw the audience's attention away from the robot while showing slides during demonstrations, or to create a smoother transition between different characters acted by the robot. This functionality is also available through the Remote API on the /furhat/visibility endpoint.

Known issues and limitations

- If characters are switched rapidly, the face can end up in an unexpected state. Switching characters again usually repairs it.

- On the SDK, fading does not work reliably, and no fix is available yet.

- The graphics processing power needed to run the new Virtual Furhat has increased, partly due to the improved lighting. If your computer struggles, try making the window smaller so that fewer pixels need to be rendered. If needed, we will consider adding graphics options. On some Linux distributions, the window appears to stay locked in full-screen mode once full-screen has been enabled.

- Using an external monitor together with FaceCore currently breaks touch input. As a workaround, you can either open the web interface from another device (e.g. an iPad or laptop) or switch to the legacy OSG face engine.

If you encounter any issues that you think we should know about, don't hesitate to send us a support ticket!