Furhat Gesture Capture Tool (Beta)

The Gesture Capture Tool can be used to create lifelike, expressive facial expressions, gaze or lip movements that can be played on the robot. It converts a face recording made with a motion capture toolkit into a Furhat gesture. You can use it to create gestures that are incorporated into the robot's automatic behaviour, or full responses that combine speech with lip, eye and head movements.

The tool is currently released as a Beta and may undergo major changes before a fully supported version is available.

Input: a .csv file generated by the iPhone app Live Link Face

Output: a Furhat gesture file in .json format.

The tool requires an iPhone with the Live Link Face app, which uses Apple's ARKit face tracking. ARKit parameters are supported by the FaceCore face engine in the Furhat Platform (version 2.0.0 and later), and the Gesture Capture Tool translates the recorded parameters into the ARKitParams of the Furhat Skill Framework.

Download the Gesture Capture Tool (Beta):

v0.0.7 - Windows 64-bit - Linux 64-bit - MacOS

v0.0.6 - Windows 64-bit - Linux 64-bit - MacOS

Note that you may need to install a Java 11 Runtime Environment (JRE).

Download the Live Link motion capture app

First, download the Live Link Face app to an iPhone X or newer. It is available for free from the App Store.

Unfortunately, we currently do not have any tools to support facial recording on Android phones.

Recording a gesture

Next, record the facial expression using the Live Link Face app. Note that if you want to record your head movements as well, you have to activate 'Stream head rotation' in the settings of the Live Link Face app. After recording, transfer the data generated by the app to your computer. There will be a folder containing a .csv file, a .mov file (with a video of your recording), and two more files.
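If head movements matter for your gesture, you may want to confirm that head rotation actually made it into the recording before opening it in the tool. The Kotlin sketch below scans the text of the .csv file for non-zero head rotation values. It assumes the header row names these columns HeadYaw, HeadPitch and HeadRoll; that is an assumption about the Live Link Face export format, not something this guide specifies, so check the header of your own file.

```kotlin
// Assumption: the Live Link Face CSV has a header row that names the head
// rotation columns "HeadYaw", "HeadPitch" and "HeadRoll". These names are
// not confirmed by this guide; check the header of your own file.
val headColumns = listOf("HeadYaw", "HeadPitch", "HeadRoll")

// Returns true if any head rotation column holds a non-zero value in any data row.
fun hasHeadRotation(csvText: String): Boolean {
    val lines = csvText.lines().filter { it.isNotBlank() }
    if (lines.size < 2) return false // empty file or header only
    val header = lines.first().split(",").map { it.trim() }
    val indices = headColumns.map { header.indexOf(it) }.filter { it >= 0 }
    if (indices.isEmpty()) return false // no head rotation columns at all
    return lines.drop(1).any { row ->
        val cells = row.split(",")
        indices.any { i ->
            val value = cells.getOrNull(i)?.trim()?.toDoubleOrNull()
            value != null && value != 0.0
        }
    }
}
```

If every head value is zero, the recording was most likely made without 'Stream head rotation' enabled.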

Turn the captured face into a Furhat gesture

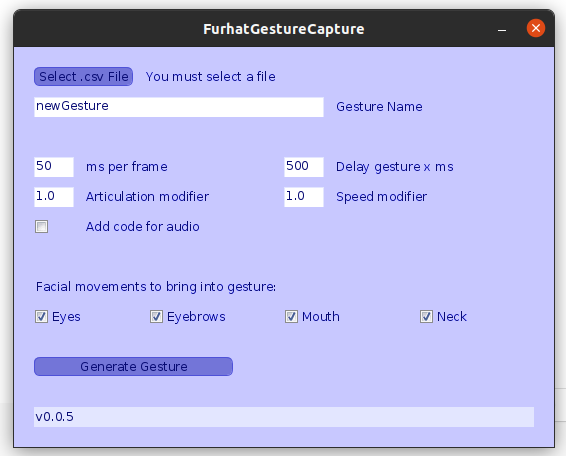

Run the Gesture Capture Tool and open the .csv file of your captured facial expression.

To make a gesture with default settings, just click Generate Gesture.

A .json file named after the gesture name you entered is created in the directory of the Gesture Capture Tool. (Note: if you are working on a Mac and no .json file has been created, move the tool to a different folder and try again.)

Settings

You can adjust the following settings.

Gesture Name - This determines the name of the gesture and of the .json file.

ms per frame - The minimum number of milliseconds between frames. A value of 50 gives a frame rate of slightly under 20 frames per second; a value of 100 gives slightly under 10.
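The arithmetic behind these numbers: if frames are at least X ms apart, the rate can never exceed 1000 / X frames per second. The Kotlin sketch below shows that bound together with a simple minimum-gap filter over capture timestamps. The filter is an assumption about how such a setting could work, not the tool's actual code.

```kotlin
// Upper bound on frames per second for a given "ms per frame" value.
fun maxFrameRate(msPerFrame: Double): Double = 1000.0 / msPerFrame

// Minimum-gap filter: keep a captured frame only if at least msPerFrame
// milliseconds have passed since the last frame that was kept.
fun downsample(timestampsMs: List<Long>, msPerFrame: Long): List<Long> {
    val kept = mutableListOf<Long>()
    for (t in timestampsMs) {
        if (kept.isEmpty() || t - kept.last() >= msPerFrame) kept.add(t)
    }
    return kept
}
```

With timestamps 0, 17, 34, 51, 68, 85 and 102 ms and a value of 50, this keeps the frames at 0, 51 and 102 ms. Because they end up 51 ms apart rather than 50, the rate is about 19.6 frames per second, which illustrates how the result can land slightly under the bound.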

Delay gesture x ms - An offset that adds time before the gesture starts. If the gesture starts at frame zero, it begins abruptly and does not look natural. A value of 500 gives half a second of easing into the gesture.
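A minimal sketch of the idea, assuming a gesture is a list of timed frames and that the robot blends from its current face towards the first frame. Delaying then just shifts every frame time, so the first captured pose is reached after the delay instead of immediately. The Frame class and parameter names are hypothetical, not the Furhat API.

```kotlin
// Hypothetical frame representation: a time in seconds plus parameter values.
data class Frame(val timeSec: Double, val params: Map<String, Double>)

// Shifts every frame later by delayMs milliseconds, leaving the values unchanged.
fun delayGesture(frames: List<Frame>, delayMs: Long): List<Frame> {
    val offsetSec = delayMs / 1000.0
    return frames.map { it.copy(timeSec = it.timeSec + offsetSec) }
}
```

With a value of 500, a frame originally at 0.25 s moves to 0.75 s, and the first frame moves from 0 s to 0.5 s.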

Articulation modifier - All parameters for facial movements (everything except neck/head movements) are multiplied by this value. A value of 1.2 makes the gesture more exaggerated; a value of 0.9 makes it less pronounced.
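The scaling rule can be sketched in a few lines of Kotlin. The sketch assumes, for illustration only, that neck/head parameters can be recognized by a "NECK_" prefix; the parameter names are hypothetical and not taken from the tool.

```kotlin
// Assumption: neck/head parameter names start with "NECK_"; every other
// parameter is treated as a facial movement. The names are illustrative.
fun applyArticulation(params: Map<String, Double>, modifier: Double): Map<String, Double> =
    params.mapValues { (name, value) ->
        // Head movements keep their recorded value; facial movements are scaled.
        if (name.startsWith("NECK_")) value else value * modifier
    }
```

This sketch does not clamp the results, so a modifier above 1.0 can push a parameter beyond the range it was recorded in.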

Speed modifier - Changes the speed of the gesture. A value of 2.0 makes it twice as fast; 0.5 makes it half as fast. If you want to synchronize the gesture with sound, we recommend leaving this at 1.0.
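Assuming the same hypothetical frame representation as a list of timed frames, a speed change amounts to dividing every frame time by the modifier. A Kotlin sketch, not the tool's own code:

```kotlin
// Hypothetical frame representation: a time in seconds plus parameter values.
data class Frame(val timeSec: Double, val params: Map<String, Double>)

// Divides every frame time by the speed modifier: 2.0 halves the duration,
// 0.5 doubles it. Parameter values are left unchanged.
fun changeSpeed(frames: List<Frame>, speed: Double): List<Frame> {
    require(speed > 0.0) { "speed modifier must be positive" }
    return frames.map { it.copy(timeSec = it.timeSec / speed) }
}
```

This also explains the recommendation for audio: the sound file still plays at its recorded speed, so a 4-second gesture played with a modifier of 2.0 ends after 2 seconds while the audio keeps running for 4.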

Facial movements to bring into gesture - These four checkboxes are all checked by default. Unchecking one of them excludes that facial feature when the gesture is created. For example, unchecking Eyes means the generated gesture contains all facial features except eye movements (including blinking), and unchecking Neck means the gesture contains no neck/head movements.
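Conceptually, each unchecked box removes a group of parameters from every frame. The Kotlin sketch below assumes the groups can be recognized by a name prefix (for example "EYE_" for eye movements, including blinking, and "NECK_" for head movements). These names are illustrative, not how the tool is known to classify parameters.

```kotlin
// Assumption: parameter names share a prefix per facial feature, e.g. "EYE_"
// for eye movements and "NECK_" for head movements. The names are illustrative.
fun dropFeatures(params: Map<String, Double>, uncheckedPrefixes: Set<String>): Map<String, Double> =
    // Keep only parameters that do not belong to an unchecked feature group.
    params.filterKeys { name -> uncheckedPrefixes.none { prefix -> name.startsWith(prefix) } }
```

Unchecking Eyes would correspond to passing setOf("EYE_"), which drops EYE_BLINK_LEFT but keeps JAW_OPEN and NECK_PAN.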

Add code for audio - This adds a frame at the very beginning of the .json file that plays a sound file from the resources folder (by default, the audio subfolder). You have to put the sound file into the resources folder manually. If you like, you can use the .mov file generated by Live Link Face: extract its audio with your favorite video editor and convert it to .wav. Once the .json file is imported into IntelliJ, you can change the file name and location, as well as the time (to sync the audio with the movements), as you like. The added frame looks like this:

```json
{
  "time": [0.5],
  "persist": false,
  "params": {},
  "audio": "classpath:audio/soundFile.wav"
}
```

Using the gesture

Open your skill in IntelliJ and move the generated .json file into the skill's resources folder. You can then create a gesture from the .json file like this:

```kotlin
// Load the gesture from newGesture.json at the root of the skill's resources folder
val testGesture = getResourceGesture("/newGesture.json")
```

This will allow you to use the gesture throughout your skill just like any other gesture:

```kotlin
furhat.gesture(testGesture)
```

A word on Microexpressions

Gestures that include gaze or head movements can interfere with the robot's automatic behaviour, so the gesture might not look exactly the way you want. Consider temporarily turning off Furhat's automatic behaviour before playing the gesture:

```kotlin
furhat.setDefaultMicroexpression(blinking = false, facialMovements = false, eyeMovements = false)
```

Then turn it back on afterwards:

```kotlin
furhat.setDefaultMicroexpression(blinking = true, facialMovements = true, eyeMovements = true)
```
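If the code that plays the gesture throws an exception, the second call is never reached and the automatic behaviour stays off. A common Kotlin pattern is to pair the two calls with try/finally. The helper below is hypothetical (it is not part of the Furhat SDK) and takes the two calls as lambdas, so the sketch itself runs without the SDK.

```kotlin
// Hypothetical helper (not part of the Furhat SDK): runs `action` with automatic
// behaviour disabled and always re-enables it, even if `action` throws.
fun <T> withAutoBehaviourOff(disable: () -> Unit, enable: () -> Unit, action: () -> T): T {
    disable()
    try {
        return action()
    } finally {
        enable()
    }
}

// In a skill, the two calls shown above would be passed in (not runnable without the SDK):
// withAutoBehaviourOff(
//     disable = { furhat.setDefaultMicroexpression(blinking = false, facialMovements = false, eyeMovements = false) },
//     enable = { furhat.setDefaultMicroexpression(blinking = true, facialMovements = true, eyeMovements = true) },
// ) { /* play the gesture here and wait until it has finished */ }
```

Note that `action` must not return until the gesture has finished playing; if the gesture is started asynchronously, the automatic behaviour is restored while it is still running.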